Will AI Fix Bangladesh’s Inequality or Automate It?

This is the final article in a four-part series that began with Bangladesh’s AI Moment and What Bangladesh’s AI Policy Must Get Right, both of which were written before the UNESCO Bangladesh Artificial Intelligence Readiness Assessment Report 2025 was published. Those pieces examined vision and policy from first principles.

Part 3 explored the institutional infrastructure required for implementation.

When the UNESCO report arrived, it completed the logic. It showed not just what Bangladesh aspires to build, but what the full arc requires and what is put at risk if we build without addressing who benefits and who gets left behind.

AI systems don't operate in a vacuum. They operate on people and amplify the society beneath them. That brings us to the uncomfortable question at the heart of Bangladesh's AI future: if we deploy these systems on top of our existing inequalities, do we fix them or automate them?

The UNESCO report answers that question with data, not theory.

Unless we act deliberately, the AI transition will deepen our divides rather than bridge them.

The Analog Divide

"AI for All" is an inspiring slogan. But the UNESCO report shows that reality looks different.

Only 44.5 percent of Bangladesh's population uses the internet. Urban usage stands at 66.8 percent. Rural usage is just 29.7 percent. Gender gaps persist everywhere. In rural regions, 36.6 percent of men are online, compared with only 23 percent of women. Frequent rural power outages, sometimes 7 to 8 hours per day, make digital participation unreliable.

If half the country is offline, what exactly do AI-enabled public services achieve? We cannot build a high-tech national superstructure on an analog foundation. That is not inclusion.

Data Sovereignty and Cultural Erasure

The report highlights a deeper problem. Bangladesh does not control the data that will shape its AI future.

Bengali datasets remain sparse. Open-source corpora are limited. The result is predictable: AI systems working in Bangla produce output that is weak, inaccurate, or culturally distorted. UNESCO cites one case: an AI-translated mathematics textbook that produced distorted and culturally inappropriate content. The report doesn't specify what went wrong, but the pattern is clear. AI systems trained on dominant languages fail systematically when applied to Bengali contexts.

Minority languages fare worse. Many lack written corpora or even standard fonts. Without deliberate investment in language datasets, these communities will be excluded from the digital future, not out of malice, but through neglect. Algorithmic silence.

The roadmap's call for data sovereignty and curated datasets in Bangla and minority languages may be the single most crucial long-term recommendation in the entire report. AI systems do not produce neutral output. They reproduce whoever showed up in the training data, and they omit whoever was left out.

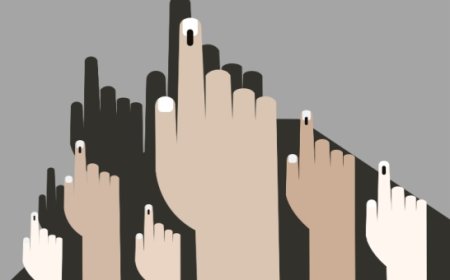

The STEM Gender Gap

One of the more quietly worrying figures in the UNESCO report is the STEM gender gap. Among tertiary graduates in Bangladesh, 12.39 percent of men earn degrees in STEM fields. For women, the figure is 8.24 percent.

This creates a double exclusion. As AI spreads through agriculture, manufacturing, health, finance, and the public sector, women risk being underrepresented among the people who build these systems and underprepared among those expected to use or supervise them. When the builders of the future disproportionately look like one half of the population, the biases inside those systems silently reproduce that imbalance.

Bangladesh's AI future will not be limited by talent. It will be limited by who we allow to participate in its making. If we do not bring women into STEM, into AI literacy, into digital access, and into the public conversations about technology, the systems we build will inherit our blind spots.

The tragedy is not that women will be replaced by AI. The tragedy is that they will be excluded from shaping it.

The Choice Before Us

UNESCO ends on a note of fundamental optimism. I share that view. But optimism only matters if paired with agency.

If we close the analog divide, build sovereign datasets in Bangla and minority languages, and bring women into AI's design and deployment, Bangladesh can build systems that reflect the full population. If we do not, Smart Bangladesh will be a digitally upgraded version of the same exclusions.

AI will reshape Bangladesh. The question is whether that reshaping widens inequality or narrows it. Neither outcome is inevitable.

It is a choice, but the window to make that choice is narrowing.

Dr. Zunaid Kazi is an AI visionary and entrepreneur who has spent over 30 years turning complex ideas into intelligent systems.
