Can We Critically Look at People’s Movements?

If states tighten control over digital spaces to prevent manipulation, how do democracies function? How do we distinguish between organic, bottom-up people’s movements and those that are partially orchestrated or externally influenced?

It can now be reasonably argued that we are living in a Gen Z mass protest era.

From Bangladesh and Nepal to Iran and beyond, the world is witnessing large-scale street mobilizations demanding reform, freedom, dignity, and social justice.

These movements are often driven by younger generations confronting stagnant economies, shrinking opportunities, and political systems that have failed to deliver equitable outcomes.

In that sense, the anger and urgency behind these protests are both real and understandable.

However, acknowledging the legitimacy of popular grievances does not mean suspending critical inquiry.

In fact, the scale and frequency of contemporary mass movements raise new and uncomfortable questions -- questions that did not exist in earlier eras of protest.

One defining feature of today’s protest landscape is the centrality of digital platforms. Social media is no longer merely a tool for expression; it is an infrastructure for mobilization.

Narratives are shaped on Twitter, Facebook, TikTok, and Telegram before they ever reach the streets.

This has created a new political reality where perception, virality, and algorithmic amplification often matter as much as -- if not more than -- traditional political organization.

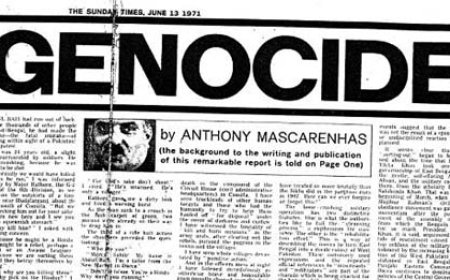

Within this environment, the idea of regime change operations has entered popular discourse. Podcasts, online commentators, and investigative reports increasingly discuss how political movements can be influenced, accelerated, or distorted through digital propaganda, coordinated messaging, and foreign or partisan interests.

What was once the domain of intelligence agencies and academic journals has now become part of everyday political conversation.

The July Movement in Bangladesh must be understood within this broader global context.

Undoubtedly, it was a mass movement. People from different social classes, professions, and political backgrounds came out onto the streets. The anger was genuine, and the participation was real. To deny this would be intellectually dishonest.

At the same time, it is equally dishonest to pretend that the movement existed in a digital vacuum. The widespread discussion of “bot bahini” (bot army) after July did not emerge randomly.

It reflected a growing public awareness that online ecosystems can be manipulated -- through fake accounts, coordinated campaigns, selective outrage, and narrative engineering. Whether these attempts were fully successful is beside the point.

The fact that such orchestration was attempted, or at least suspected on a large scale, should concern any serious observer.

In Bangladesh, the Iranian protests have drawn divided reactions. A significant portion of the population supports the Iranian government’s crackdown, approving of the measures taken to restrict internet access and suppress the demonstrations, and sees it as a victory against colonial interference in Iran’s sovereignty.

Consequently, the line between acceptable and unacceptable digital shutdowns has become blurred. Whether such actions are judged just or unjust appears to depend primarily on their perceived moral standing and the narrative behind them.

Why does this matter?

Because if political movements can be artificially amplified or strategically steered through digital means, they cease to be purely domestic phenomena. They become potential tools in geopolitical competition.

In such a scenario, social unrest is no longer just a reflection of internal failures; it becomes a security vulnerability that external actors can exploit.

The torching of The Daily Star office, following online mobilization by digital commentators such as Pinaki and Ilias, sharpens this dilemma further. It forces an uncomfortable question: What separates a people’s movement from a mob?

During the July Uprising, the same digital voices urged people to take to the streets to challenge the establishment -- calls that were widely seen as legitimate resistance.

Yet when similar appeals later directed crowds against alleged “enablers” of that establishment, the results were destructive, not emancipatory. Institutions were attacked, and moral clarity gave way to fear.

If the method remains the same but only the target changes, how do we decide what is legitimate? When does mobilization stop being protest and start becoming punishment? In an age of instant digital amplification, this line matters -- because once a crowd is called onto the streets, it is rarely possible to control what follows.

During the Hong Kong protests, Chinese state media portrayed the demonstrations as foreign-backed riots, while China-linked networks simultaneously flooded global platforms such as Twitter and Facebook with coordinated pro-Beijing content.

Social media companies later confirmed and removed hundreds of state-linked accounts that used fake personas, deceptive identities, and targeted messaging to shape international opinion. The episode revealed how modern states now deploy large-scale, organized digital propaganda -- using bots, paid commentators, and covert advertising -- to influence narratives far beyond their borders.

This concern is not exclusive to Bangladesh. In India, there has been debate over whether private satellite internet systems such as Starlink could pose national security risks, given concerns about data sovereignty and surveillance.

China has long maintained a tightly controlled digital ecosystem precisely to prevent external influence and internal destabilization. Starlink is prohibited from operating there.

During the recent Iranian protests, Iranian authorities launched a multi-layered digital suppression campaign that, within hours, degraded Elon Musk’s Starlink satellite internet service from functional connectivity to what engineers described as a “patchwork quilt” of intermittent access.

According to Filter.Watch, an Iranian internet rights monitoring group, packet loss in Tehran surged from 30% to over 80%.

This marked the first verified instance of a nation-state successfully neutralizing Starlink at a national scale during an internal political crisis, an effort carried out with hardware and software support from China and Russia.

Official assessments of electoral interference in the United States have already established that recent US elections were not insulated from manipulation.

Foreign influence operations, domestic disinformation campaigns, and the growing use of AI-generated content and social bots all played a role in shaping public opinion, deepening social divisions, and eroding trust in democratic institutions. US intelligence agencies publicly concluded that foreign adversaries -- most notably Russia and Iran -- had actively sought to interfere in the electoral process.

These efforts took multiple forms. State-linked actors disseminated propaganda and misleading narratives across major platforms such as Facebook, Instagram, X, and Telegram. Networks of fake accounts, trolls, and automated bots amplified polarizing content, targeted specific communities, and exploited existing racial and political fault lines.

In some cases, influence campaigns were tailored toward particular voter blocs, including Black voters, or directed against specific political campaigns, using divisive and negative messaging to suppress turnout or inflame distrust.

Against this backdrop, the United States itself moved to reassert control over its digital information space. TikTok’s operations in the US were placed under a new ownership structure, with a majority-American board overseeing a separate domestic entity.

Following the conclusion of the deal, TikTok revised its terms of service for US users, shifting contractual responsibility to the newly formed TikTok USDS Joint Venture and introducing stricter rules, including limitations on access for users under the age of thirteen.

For a country that has long positioned itself as a global advocate of free expression and open digital ecosystems, the move was striking. It reflected a clear lack of trust in a Chinese-owned social media platform operating without state oversight, and it amounted to what many observers described as something close to a forced or hostile takeover.

Iran, facing repeated cycles of protest and unrest, was by this point openly debating -- and in some cases implementing -- similar measures to assert state control over its digital infrastructure.

During the 2016 Brexit referendum, coordinated social media activity -- including bot networks, targeted advertising, and algorithmic amplification -- was used to intensify polarization and influence public opinion.

Post-vote analysis identified thousands of bot-like accounts that aggressively promoted Leave narratives before vanishing, highlighting how digitally engineered mobilization can shape major political outcomes.

These examples illustrate a global trend: States are increasingly viewing digital autonomy as a core component of national security. Concepts such as state-controlled AI, data localization, and platform regulation are no longer theoretical -- they are policy priorities.

Yet this brings us to the most difficult question of all. If states tighten control over digital spaces to prevent manipulation, how do democracies function? How do we distinguish between organic, bottom-up people’s movements and those that are partially orchestrated or externally influenced? At what point does legitimate dissent become “destabilization,” and who gets to decide?

There are no easy answers. This is uncharted territory. The tools that empower citizens to organize are the same tools that allow narratives to be hijacked. Blind faith in “the people” is as dangerous as blind faith in the state. Democracy has always required skepticism -- not cynicism, but skepticism -- towards power in all its forms.

This is precisely why the July Movement must be examined critically, not emotionally. Critical examination does not delegitimize protest; it strengthens political maturity.

Asking uncomfortable questions is not betrayal -- it is responsibility. Societies that refuse to interrogate their own movements risk repeating the same cycles of manipulation, disappointment, and instability.

As Bangladesh moves toward another election, social media is already shaping political opinion in visible ways. The term “bot bahini” has entered everyday language, reflecting a growing public awareness that online narratives may not always be organic.

The question, however, remains unresolved: How do we know whether political discourse is being engineered by vested foreign interests rather than emerging from genuine public sentiment?

More importantly, what safeguards exist to detect, regulate, or resist such manipulation?

History shows that revolutions are rarely as pure as they appear in the moment. They are complex, contested, and often shaped by forces invisible to the crowd on the street. Pretending otherwise does not protect democracy -- it infantilizes it.

If we choose to shut down debate in the name of moral certainty, we may feel righteous today. But sooner or later, we will face the consequences of not having asked the hard questions when we had the chance.

And by then, it may be too late.

Muhaimen Siddiquee is a brand and communications professional with a strong interest in culture, politics, and history. An IBA graduate, he applies insights from consumer behaviour to understanding shifts in society and historical changes.
